We're all awash in startling articles lately about how mind-blowingly good ChatGPT is, about how it works, and how it's going to change our world. There really is so much to process and I'm confident we're at a technological inflection point of greater significance than even the invention of the internet. However, amidst the frenzy of discussing how ChatGPT and other LLMs will change the world we live in, I can't stop coming back to pondering what I believe is another fascinating (albeit unsettling) question that most people don't seem to be talking about - What does the power of ChatGPT say about the human brain and about our understanding of our reality?

In this post, I'd like to share some of these ChatGPT-induced philosophical questions I've been grappling with lately. Before we get there, I'll attempt to explain how ChatGPT works in layman's terms, as briefly as possible. If you have already developed an understanding of those concepts, feel free to skip to the last section of this post.

A Quick Crash Course on What ChatGPT is Doing

If you haven't already gotten around to reading about how ChatGPT and other LLMs work, it's actually not as mysterious as you might be assuming and there are some great posts out there that I think you will find quite approachable. For example, this post by Stephen Wolfram is a great place to start if you're looking for something that breaks it down in terms that don't require a software development background to understand. Or you could just ask ChatGPT and I'm sure it would do an excellent job of explaining it to you as well :). In order to follow what I'm going to propose in this essay, you will need to understand a few high-level concepts about how these LLMs work.

To put it in oversimplified terms, you can think of an LLM as a program that has been fed a whole bunch of human-generated training data. That includes things like Wikipedia entries, large collections of digitized books, programming language documentation, medical journals, etc. Then, when it's given a prompt, it provides an answer by repeatedly solving for "what should the next word in my answer be, based on all the patterns of words in my training data?" There's quite a bit more to it from a technical standpoint (e.g., prompt interpretation, "temperature" parameters, etc.), but at the end of the day it's guessing the next word over and over again based on all the combinations of words it's been shown.
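If you'd like to see the "guess the next word over and over" idea in code, here is a minimal sketch. To be clear, this is not how ChatGPT is actually built: real LLMs use large neural networks rather than simple word counts, and the tiny corpus, the function names, and the way temperature is applied to counts are all assumptions I made up for illustration. It only shows the shape of the loop: look at what came before, estimate probabilities for the next word, pick one, repeat.

```python
import math
import random
from collections import Counter, defaultdict

# Toy "training data" (hypothetical); a real LLM sees vastly more text.
CORPUS = (
    "the ambulance has a red stripe . "
    "the ambulance has lights . "
    "the van has a brown stripe ."
)


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word, temperature=1.0):
    """Turn follow-up counts into probabilities, reshaped by temperature.

    Low temperature sharpens the distribution toward the most common
    follow-up word; high temperature flattens it.
    """
    followers = counts.get(word, Counter())
    weights = {w: math.exp(math.log(c) / temperature) for w, c in followers.items()}
    total = sum(weights.values())
    return {w: weight / total for w, weight in weights.items()}


def generate(counts, start, max_words=8, temperature=1.0, seed=0):
    """Repeatedly sample a next word, feeding each choice back in."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words):
        probs = next_word_probabilities(counts, output[-1], temperature)
        if not probs:
            break  # the last word never appeared with a follow-up word
        words = list(probs)
        output.append(rng.choices(words, weights=[probs[w] for w in words])[0])
    return " ".join(output)


counts = train_bigram_counts(CORPUS)
print(next_word_probabilities(counts, "has"))
print(generate(counts, "the", temperature=0.7))
```

In this toy corpus, "a" follows "has" two times out of three, so it gets a probability of about 0.67 at a temperature of 1.0. Lowering the temperature pushes that number higher, which makes the output more predictable.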

Let's come at it another way. Imagine you're teaching a child what an ambulance is. You start by showing them pictures in a children's book, but when you ask them if they see the ambulance out the window while you're driving them to the park, they likely struggle at first to make the connection from the drawing in the children's book to the 3D vehicle. The white ambulance looks relatively similar in size to the Amazon delivery vans. And what about that other box-shaped, brown truck that says UPS on the side? That could be an ambulance, too, right? Over time, you keep pointing out ambulances to the child and, each time, their brain augments its database with another data point about ambulances. Eventually, they've seen enough ambulances to know that a vehicle is likely an ambulance if:

- It has lights on it
- It is a bit bigger than a large van
- It's some combination of white and red with a stripe down the side
- It has the word "Ambulance" on the side (this connection becomes easier once they learn to read)

As they continue to age and experience life, their ability to determine that a vehicle is an ambulance continues to be strengthened as they observe more and more data points to add to their training set. Driving by hospitals teaches them that if there's a patient being unloaded on a stretcher, it's extremely likely to be an ambulance. Eventually they experience an ambulance with its lights and sirens on, another strong signal of ambulance-ness. As their consciousness expands, their brain begins to associate more and more meaning with their conception of what an ambulance is. Eventually, if someone breaks their pelvis and calls 911 for help, they can confidently guess that the vehicle that shows up will be an ambulance without having to see whether it has lights and a red stripe.

This analogy is a drastically oversimplified way to explain how an LLM works, but I hope it will help you to see the conceptual similarities between how the model learns and how our human minds work.
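For the code-inclined, the ambulance story can be sketched as a small program that keeps tallies of which features it has seen on which vehicles. This is a toy counting classifier (loosely in the style of naive Bayes) that I'm swapping in purely for illustration; it is not how an LLM or a brain works internally, and the class name, feature strings, and example sightings are all made up.

```python
from collections import Counter


class VehicleLearner:
    """Keeps running tallies of which features show up on which vehicles."""

    def __init__(self):
        self.label_counts = Counter()   # how many of each vehicle we've seen
        self.feature_counts = {}        # vehicle label -> Counter of features

    def observe(self, label, features):
        """Add one more labeled sighting to the 'training set'."""
        self.label_counts[label] += 1
        self.feature_counts.setdefault(label, Counter()).update(features)

    def score(self, label, features):
        """Rough plausibility of `label`, using only the features observed.

        Each feature contributes a smoothed fraction of past sightings of
        this label that had the feature (the +1 and +2 avoid zero scores).
        """
        seen = self.label_counts[label]
        score = seen / sum(self.label_counts.values())
        for feature in features:
            score *= (self.feature_counts[label][feature] + 1) / (seen + 2)
        return score

    def guess(self, features):
        """Pick the label with the highest score for these features."""
        return max(self.label_counts, key=lambda label: self.score(label, features))


# Hypothetical sightings from a few drives to the park.
learner = VehicleLearner()
learner.observe("ambulance", {"lights", "red stripe", "siren"})
learner.observe("ambulance", {"lights", "red stripe", "says Ambulance"})
learner.observe("delivery van", {"brown", "says UPS"})
learner.observe("delivery van", {"white", "says Amazon"})

print(learner.guess({"lights", "red stripe"}))
print(learner.guess({"brown", "says UPS"}))
```

Every call to `observe` nudges the tallies, which is the code version of the child adding another data point. With only four sightings the guesses are shaky, and they firm up as more examples come in.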

Wait!? So can the AI model think just like a human?!

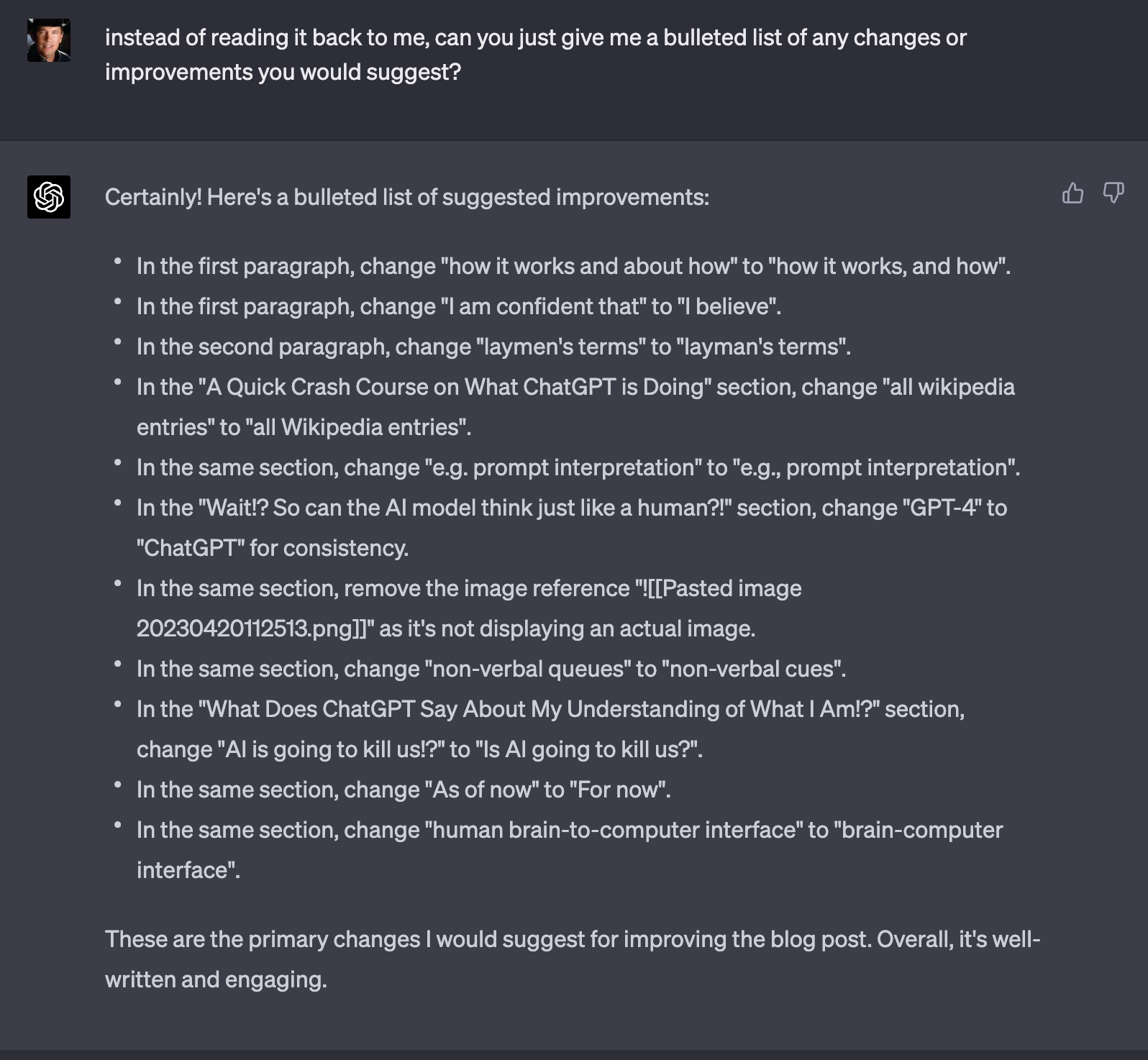

Not yet. It's essentially just a sophisticated math equation that a computer program uses to generate text based on calculated probabilities. Nature, in all its wisdom, has invested a considerable amount of time into making the human brain far more complex than GPT-4. For the sake of comparison, I do find it helpful to think about our brains like a complex computer system. Using this analogy, here are a few differences that give our brains advantages over GPT-4:

- The number of parameters the system can handle. By some estimates, our brains have over 1000x more connections than GPT-4 has parameters and can therefore handle several orders of magnitude more complexity.
- Limitations on the types of content it can produce, the types of input it can respond to, and the type of content it is trained on. As you may have experienced, ChatGPT is limited to text. It can't understand an image you send to it and it can't play you a song (although it could certainly describe one in writing). Our brains are working with a training dataset that includes sounds, smells, images, words, emotions, haptic feedback and more. I do expect AI solution developers to continue to add new functionality (e.g. connecting up a text-based LLM with DALL-E for images, OpenAI Whisper for audio processing, etc.), but we're not there yet. Remember that, in order to communicate like a human, ChatGPT would need to be able to account for the parts of human communication that are non-verbal, such as tone of voice, facial expressions, body posture and smell. You probably take for granted how much you're even using those, but studies have shown that up to 60% of how we communicate may be based on non-verbal cues!
- Static inputs vs. a capacity for continuous learning. Our brains are constantly being fed new data and (when functioning correctly) are able to leverage new inputs in near-real-time. As of the time of this writing, companies like OpenAI have not been able to solve the scale and processing speed challenges to keep their models updated in real-time.

I guess I could also let ChatGPT speak for itself:

While we've got the models beat in terms of complexity (both in neural connections as well as input interfaces), it does seem that AI will eventually be able to leverage an advantage in terms of storage space and aggregate computing power. When we take in all the data points we encounter in our everyday life, our brains don't store a perfect record of what we process. We are a long way off from fully understanding everything that our brain does to "make memories". We have a good sense that it has something to do with whatever the hell it's doing when we're sleeping but, if you read awesome neuroscience books like Why We Sleep by Matthew Walker, you'll quickly realize how limited our understanding still remains. One of the things I find particularly fascinating about these AI models is their advantage of being able to have nearly perfect recall if given enough computing power. Imagine how much easier life would be if you could literally remember every word of every book or article you ever read, every college lecture you ever sat through, and every conversation you ever had. For now, I guess I'll just have to settle for being able to ask ChatGPT to remind me when my memory gets foggy. At least until we solve the human brain-computer interface challenge.

What Does ChatGPT Say About My Understanding of Who or What I Am!?

There really are so many fascinating directions to go in when thinking about the implications of these AI models. How will they make us more powerful and open new doors? Which jobs will they replace? How might they break society in a few months once AI-generated "deep fakes" flood our media ecosystems? Is an artificial general intelligence (AGI) going to kill us all!?

However, I think one of the most mind-blowing questions arises when we flip things around and look inwards at ourselves. Yeah, my brain is way more complex and powerful than ChatGPT for all the aforementioned reasons, but at the end of the day is my brain not just guessing the next right action based on the data it has from the combination of what is encoded in my DNA and the cumulative information stored over the years I've been wandering through my little corner of the universe?

To a certain extent, this would explain a lot! Next time someone asks me "do you believe in fate?", I guess my answer will have to be that I think I just might! At least insofar as we take "fate" to mean a sequence of actions I'll take when my brain processes new inputs by guessing the next right action based on the data it has from my "nature" and "nurture".

What about our endless debates around relative socioeconomic advantages and disadvantages between human populations? If we're raised in a poor family and not exposed to quality education and a variety of experiences from which to train our models, is that akin to an LLM that has only been trained on a smaller dataset? How might that change how we think about social and cultural issues like affirmative action?

Finally, what might this say about the contention that our sense of "free will" is an illusion? It certainly doesn't appear to strengthen the case for the existence of free will! These concepts are inherently challenging to wrap our heads around as humans, but watching ChatGPT credibly answer questions about free will by simply guessing the next word over and over again is, at least, thought-provoking.

When I hear people talking about a Terminator-esque singularity event where the AI-powered machines take over, I keep coming back to wondering about these philosophical questions. The further along we get in advancements in artificial intelligence, the more we understand about how our own brains work. As of now, there is still a lot left up to mysterious "human consciousness magic" that we say sets us apart from these AI models. I suspect the "human magic" bucket will continue to shrink, and I can't help but wonder, "What if we don't end up liking what AI research teaches us about ourselves?"

I have a number of essays in the works that will delve deeper into some related concepts that I think are incredibly important, like free will and the nature of consciousness. If you are interested in exploring them along with me, you can subscribe below to receive an email when I post new essays.

Acknowledgements

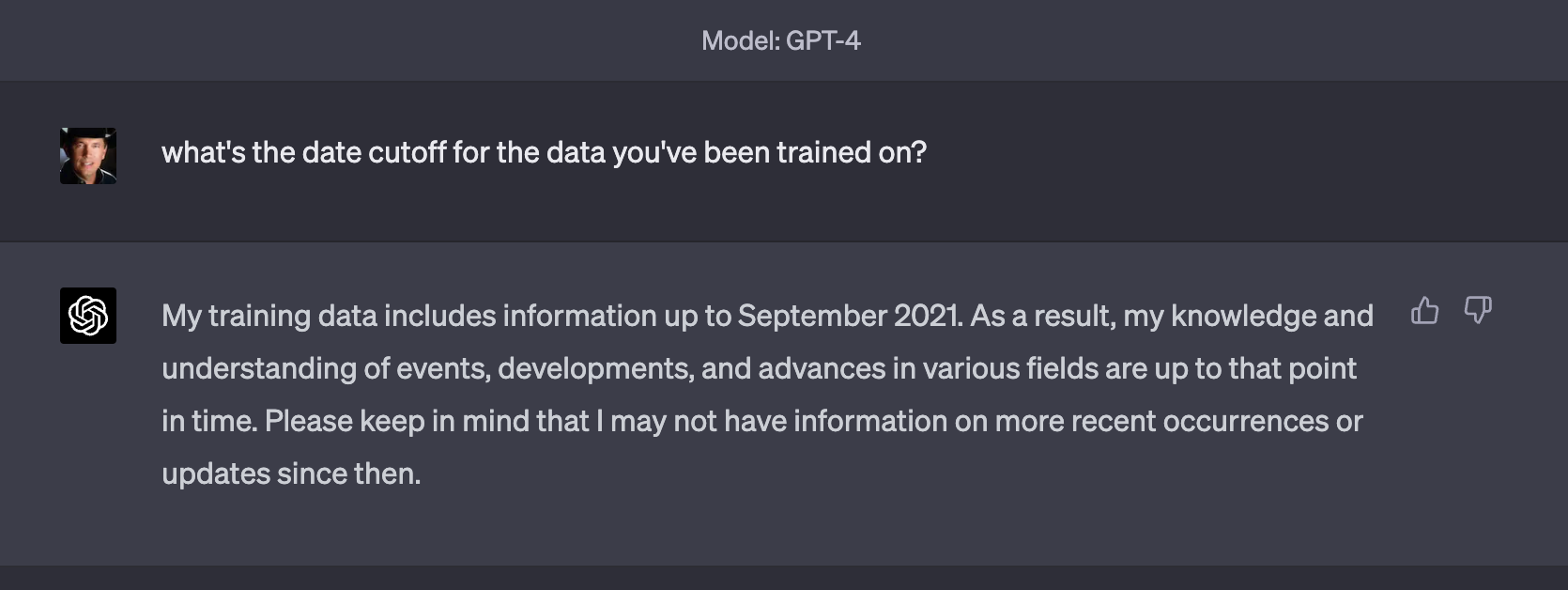

Note: I'd like to acknowledge OpenAI's ChatGPT for proofreading this essay for me before I posted it. That said, I'll have you know that it really didn't have much to add because I have a literature degree and already have excellent grammar in addition to being very humble.